Project Details

Segmentation for Chinese corpora

Chinese corpora has always been a challenging subject for NLP development, because the linguistic aspect is completely different from the European languages.

Most European languages belong to the Indo-European language family, and the three largest families are Romance, Germanic, and Slavic. They are all languages based on alphabets.

Whereas the Chinese language is a character-based language.

For a Chinese corpora, it is impossible to treat it in the same way as we did with the other languages, the Chinese texts are not segmented with space, and since there is no space between Chinese words, we should take other measures for the preprocessing of the Chinese corpus.

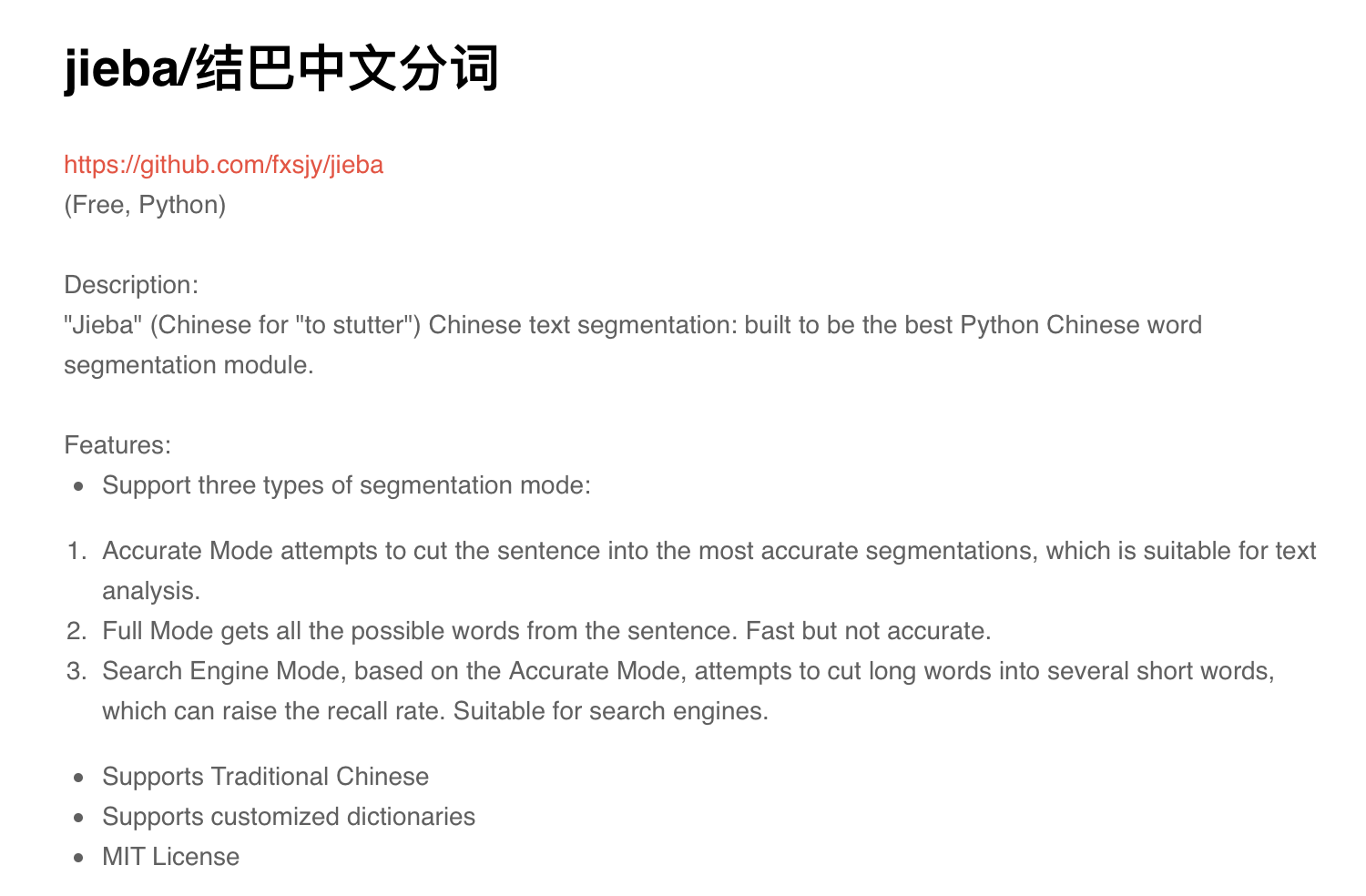

For the segmentation of the Chinese corpus, we can use the Jieba module of Python which was developed by MIT.

Thanks to this library, we are able to segment our Chinese corpus and create bigrams with a small script in Python3.

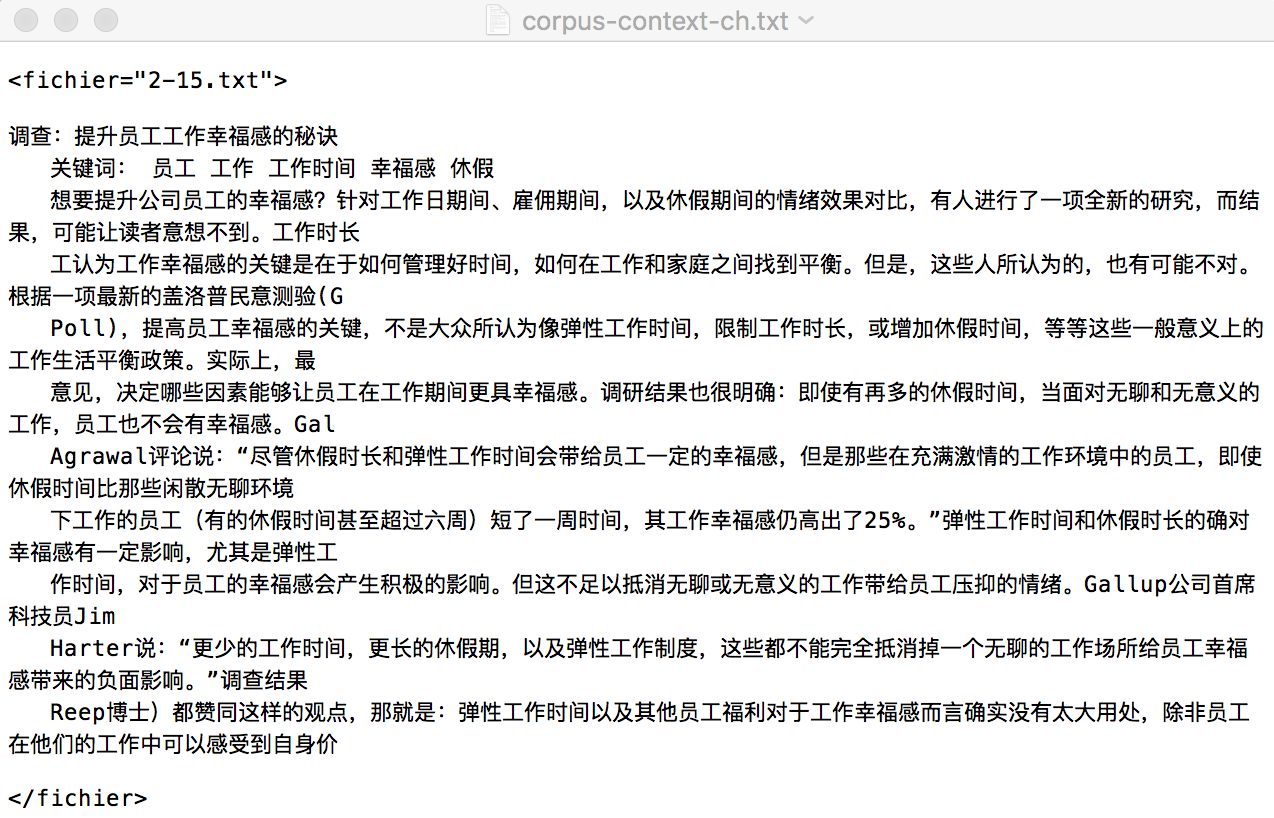

Here is the input of our Chinese text :

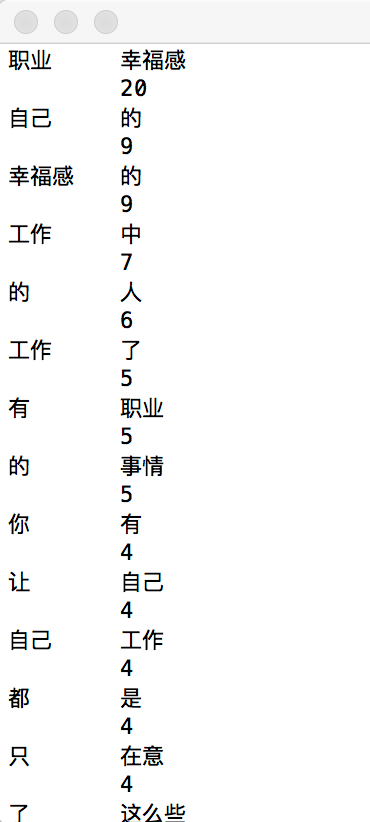

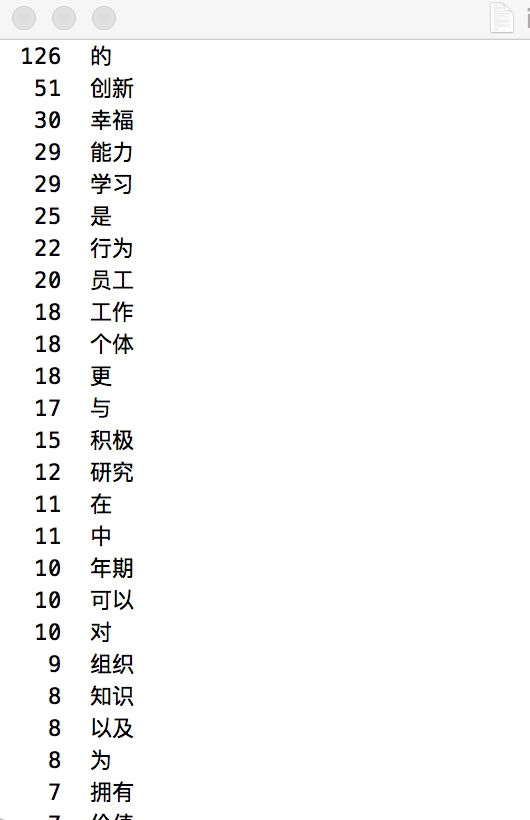

And here is the out result of bigrams created from the Chinese corpora :

With a little bit of sorting, we can get the top tokens and top bigrams from this corpora :

For more information about the project, please visit :

http://www.tal.univ-paris3.fr/plurital/travaux-2018-2019/ppe-s1/05/Bonheur_Resultat/Resultat.html